The evolution of interoperability (part 2)

This is the second post in a two-part series on the evolution of interoperability. Read part one here.

Last time, we looked at how interoperability has changed from the 1980s through the Meaningful Use era. We reached a point where interoperability was mostly event-driven and based on individual connections, and where technical standards focused on specific use cases. Today I'd like to continue our walk through time, but to begin, we'll need to back up a touch to the early 2010s.

The discovery of FHIR

In the Shahnameh, legend has it that King Hushang discovered fire when he threw a stone at a snake, intending to kill it. He missed, but sparks flew when the stone struck another rock. Fire was found, a discovery still celebrated today by Zoroastrians worldwide and by many in Iran.

The discovery of FHIR is certainly less fanciful but no less consequential. Before FHIR, healthcare lacked a clear-cut standard for exchanging data through modern web-based technologies. The technical components (OAuth, HTTPS, JSON, etc.) were already there, but we hadn't yet begun representing healthcare data as discrete, interconnected concepts (e.g., patient demographics within a Patient, facility details within a Location, drug information within a Medication).
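To make "discrete, interconnected concepts" a little more concrete, here is a minimal sketch of how FHIR R4 splits data into separate resources that point at one another through references of the form `Type/id`. The resource IDs and values are hypothetical, and the helper functions (`index_resources`, `resolve`, `summarize`) are illustrative only; in a real exchange, references are usually resolved through a FHIR server's REST API or within a Bundle rather than an in-memory dictionary.

```python
import json

# Hypothetical example resources, shaped after FHIR R4 JSON. IDs and values are made up.
patient = {
    "resourceType": "Patient",
    "id": "example-patient",
    "name": [{"family": "Doe", "given": ["Jane"]}],
    "birthDate": "1970-01-01",
}
location = {
    "resourceType": "Location",
    "id": "example-clinic",
    "name": "Example Clinic",
}
medication = {
    "resourceType": "Medication",
    "id": "example-med",
    "code": {"text": "Metformin 500 mg oral tablet"},
}
encounter = {
    "resourceType": "Encounter",
    "id": "example-visit",
    "status": "finished",
    "class": {"system": "http://terminology.hl7.org/CodeSystem/v3-ActCode", "code": "AMB"},
    "subject": {"reference": "Patient/example-patient"},         # who the visit was for
    "location": [{"location": {"reference": "Location/example-clinic"}}],  # where it happened
}
medication_request = {
    "resourceType": "MedicationRequest",
    "id": "example-rx",
    "status": "active",
    "intent": "order",
    "subject": {"reference": "Patient/example-patient"},         # links to the Patient
    "medicationReference": {"reference": "Medication/example-med"},  # links to the Medication
    "encounter": {"reference": "Encounter/example-visit"},       # links to the Encounter
}


def index_resources(resources):
    """Key each resource by its relative reference, e.g. 'Patient/example-patient'."""
    return {f"{r['resourceType']}/{r['id']}": r for r in resources}


def resolve(index, ref):
    """Follow a FHIR Reference ({'reference': 'Type/id'}) to the resource it points at."""
    return index[ref["reference"]]


def summarize(index, request):
    """Walk the references from a MedicationRequest to the other resources it connects."""
    subject = resolve(index, request["subject"])
    med = resolve(index, request["medicationReference"])
    visit = resolve(index, request["encounter"])
    place = resolve(index, visit["location"][0]["location"])
    name = subject["name"][0]
    return {
        "patient": f"{' '.join(name['given'])} {name['family']}",
        "medication": med["code"]["text"],
        "location": place["name"],
    }


if __name__ == "__main__":
    idx = index_resources([patient, location, medication, encounter, medication_request])
    print(json.dumps(summarize(idx, medication_request), indent=2))
```

Because each concept lives in its own resource, a system can fetch or update a Patient without touching the Medication or Location it is linked to, which is much closer to how general-purpose web APIs model data than a single monolithic message.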

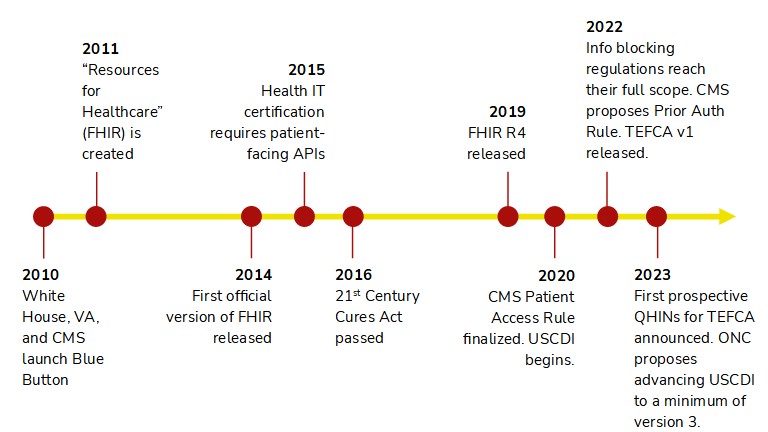

That changed in 2011, when "Resources for Health," the proposal that became FHIR, emerged. Though it was admittedly immature, it finally gave us a way to interoperate using a 21st-century approach: the same style of web APIs that services like Twitter and Facebook rely on. FHIR has since grown bigger and stronger, with its fifth major version (R5) released earlier this year. More important than the blossoming of the specification, though, is how the healthcare IT community has embraced it. FHIR accelerators emerged to solve real-world problems with FHIR, and the federal government began to push for FHIR adoption. This is a major shift from the old way, where different standards were developed and recommended for specific use cases. Instead, FHIR has become the foundational standard for healthcare interoperability, poised to one day replace narrower frameworks like HL7 v2 and DICOM.

It gets personal (and regulated)

FHIR hasn't been the only thing heating up over the past 10-ish years. Notably, interoperability has become more patient-centric. Blue Button launched in 2010 with the goal of giving patients access to their own health data. Championed by both the VA and CMS, Blue Button has unlocked veterans' health data and Medicare beneficiaries' claims data via APIs. CMS went further in 2020 when it finalized the Patient Access Rule, which required affected payers to stand up FHIR R4 APIs. This opened the door for developers to build patient-facing apps powered by members' clinical and claims data.

CMS hasn't stopped there. Late last year, the agency proposed expanding API requirements for many payers by mandating support for electronic prior authorization, payer-to-payer exchange of member data, and provider access to member data, either individually or in bulk. Yet again, as proposed, these would all need to be FHIR R4 APIs. Not to be outdone, ONC has sharpened its focus on interoperability since it began requiring patient-facing APIs in 2015. The highlight has been the United States Core Data for Interoperability (USCDI), which sets a nationwide baseline for interoperability. Under USCDI, certified EHRs must support exchanging select data elements through FHIR R4 APIs. The beauty is that new data elements are added to USCDI each year, raising the bar for interoperability on a regular basis once ONC completes the necessary rulemaking.

These regulations demonstrate how the federal government has become more assertive in moving the needle for interoperability. Rather than waiting for the industry to voluntarily embrace FHIR, the government has made it a required standard on multiple occasions. Rather than relying on private certification programs, such as the HIMSS maturity models, to incentivize the availability of APIs and the data they support, the government has mandated modern technologies and an ever-expanding scope of interoperability. This would never have happened 15 years ago, when technology was less ubiquitous and interoperability looked much different.

Marching on

The context of the past informs the dreams of our future. In merely a dozen years, we've seen a transition from individual standards to the broad-based FHIR, and we've seen government play a more active role in policing technology and patients' access to their data. I hope you've enjoyed this brief history; it makes me appreciate all the people who have worked tirelessly on interoperability since before I was born.

Now, I've used this series of posts to focus on the past, not the future. What lies ahead? Humans are notoriously bad at predicting the future, so I won't add to that miserable record. I will, though, highlight the potential of the Trusted Exchange Framework and Common Agreement (TEFCA). Once fully implemented with live Qualified Health Information Networks (QHINs), TEFCA will provide a network-based approach to interoperability from coast to coast. Rather than repeatedly building individual connections, systems will be able to route data wherever it needs to go over existing digital highways. I'm eager to see whether TEFCA makes data exchange over networks a popular choice; if so, this would truly be a milestone in the evolution of interoperability.

---

Photo by Edward Howell on Unsplash